Data-Informed, NOT Data-Driven

Hey, I’m Ant, and welcome to my newsletter, where you will find practical lessons on building Products, Businesses and being a better Leader.

You might have missed these recent posts:

- Context NOT Control

- Product Discovery Activities by Risk

- Prioritization & Urgency: Key lessons from John Cutler’s Prioritization Course

My day job is helping companies shift to a Product Operating Model.

My evening job is helping people master product management at Product Pathways.

"I can prove anything by statistics except the truth." - George Canning

Being data-driven can be an awful trap.

We believe we’re collecting data that portrays an unbiased view of the world.

Data that will help us make the ideal decision.

After all, that’s what the data says, right?

Bad news. Your data is biased.

Data-informed over data-driven!

Whether we like it or not, we inject our own biases into the process.

It’s in the way we gather data (selection bias).

The way we interpret it (recency bias).

In the insights we draw (confirmation bias).

I’m sure we all know that one executive who can bend data to rationalize anything!

“If you torture the data long enough, it will confess to anything.” - Ronald H. Coase

Of course, this doesn’t mean data isn’t helpful or meaningful.

But we need to be mindful of falling into the trap of being data-driven rather than data-informed.

Data-driven vs Data-informed

Data-driven is often used to describe letting the data do the talking.

If the data says we go left, we go left.

This mode of operating is sometimes hailed as noble. You’re taking the ego, opinions, and emotions out of the equation and relying on facts!

But the problem is this is a fallacy sold to executives by big consulting firms.

It’s dangerous to frame something subjective as objective.

Take this too far, and the company suddenly finds itself inundated with spreadsheets.

And rather than helping to inform our decisions, we’ve moved to a game of rationalization.

Riddled with confirmation bias, nothing has really changed under the surface. We’re still running on opinions, but now we have pretty numbers on a slide deck to rationalize them.

This is why we advocate for being data-informed rather than data-driven.

Use data to inform decisions. Don’t blindly take the results at face value.

“The data is there to help you have a *conversation* - see discrepancies and surprises, help validate assumptions, debate interpretations. You must never lose sight of the qualitative vision and user empathy - the data is there to augment it, not replace it. That's why you use data to inform your decisions, not drive them.” - comment from Assaph Mehr on a post I did on this topic.

Data should increase your decision quality, but it’s not a silver bullet (you might realize nothing is!)

I’ll give credit where it’s due though. For some companies, this is the exact advice they need to hear. Going from no data and gut-feel, to imperfect data is undoubtedly an improvement. But the problem is that I see this taken to the extreme.

Ok, let’s get tangible now.

Let’s jump into ways to reduce bias and increase decision quality!

1. Work cross-functionally.

Avoiding silos and getting a diverse perspective is a great way to avoid biases.

This is why having only designers do discovery is a bad idea.

A simple rule of thumb is to try not to do activities like gathering data, synthesis or interpreting insights alone.

I always think of desirability, viability and feasibility here. Do we have a good representation of each?

It’s one of the reasons why product trios are such a powerful concept and why I’m such a big advocate for engineering to be involved in discovery!

2. Falsification > Confirmation

One of my go-to principles for product work, especially discovery, is to “come up with ideas like you’re right, but test them like you’re wrong.”

Taking this one step further, Marc Randolph, co-founder of Netflix believes there’s no such thing as a great idea, “every idea is a bad idea”, you just don’t know why yet.

“I am firmly convinced there’s no such thing as a good idea. Every idea is a bad idea. No idea performs the way you expect once you collide it with reality.” - Marc Randolph

In his interview on The Diary of a CEO, Randolph shared the story of Netflix and how their most powerful tool was asking: “Why is this a terrible idea?”

As he and Reed Hastings came up with new business ideas, they would go through all the reasons why each one was a bad idea.

For example, when they originally came up with Netflix, they discarded the idea because VHS tapes were too big to fit in the post and would likely get damaged.

However, months later, DVDs came along, and that led them to revisit the idea. That morning, in fact, on the way to work, Randolph and Hastings tested the idea by buying a CD to stand in for a DVD and mailing it to themselves.

If the CD arrived broken, then they were right, it’s still a bad idea. But if not, maybe there was something there.

Sure enough, the CD arrived undamaged, and the rest is history.

This is a powerful mindset to have and a great way to avoid biases like confirmation bias, halo effect, optimism bias, etc.

Aim for falsification over validation.

Approach discovery and gathering data as trying to prove yourself WRONG, not right.

3. Multiple data points

Another habit I’ve forced myself to develop is gathering multiple data points.

It’s easy to bend a single piece of data to conform to your beliefs, but much harder to do that across multiple.

It also helps identify potential biases and false positives.

That’s why there’s the saying: “when the qual (qualitative data) and the quant (quantitative data) don’t agree, the qual is usually right.”

Anthony Marter shared a great example of this from his last gig in a comment on one of my posts:

“I also always recommend to balance quant with qual information. A good example is that I used FullStory in my last gig - it does basic product analytics in addition to its core competence of screen flow recording. Quite frequently I'd draw a conclusion based on something the metrics were telling me, but then I'd watch half a dozen screen recordings from users in the metric to see what they were actually doing and rapid realise that the conclusion I'd drawn was very very incorrect!” - comment from Anthony Marter

It’s why, at a minimum, we advocate for a mix of qual and quant.

Getting more advanced, you can bring in other data points as well.

I’m currently working with a team that owns an internal product and we were planning out our discovery activities the other day. We decided to ask both the risk and compliance teams, plus the front-line staff who are the end users, check what the legal documents say, and gather some data on what is happening in practice (a mix of observation and usage data).

That’s 4 to 5 different data sources for the one thing we’re trying to learn. Perhaps that’s overkill, but I can bet you now that they won’t all agree with each other!

It is dangerous to take one person’s view as gospel - especially your own!

4. Reflection

Another great way to improve your decision quality over time is to revisit past decisions and reflect on them: what went wrong? What went right? What would you do differently next time?

Include data in this reflection. Was the data correct? Did it lead you in the right direction or lead you astray?

If the data and reality didn’t align, why? What might have been the cause? What would you do differently next time?

5. Become bias aware

Lastly, I believe one of the best things I’ve done in my career is to learn more about different biases and try to become more aware of them.

Couple this with the previous point and you can start to identify moments where you might be falling into certain biases.

Some top ones relevant to this topic:

To illustrate this in practice: I've recently been coaching a Head of Product one-on-one who was looking at a bunch of research, done before he joined, about a new product line they were considering. This new product would attract a new customer segment. Unfortunately, the research was done by interviewing recent customers. That's selection bias.

The problem here is that their current customers are not the target for this new product, and while I’m sure some of the data was valid, it’s hard to trust it when it’s coming from people who aren’t the target.

Final Thoughts

The key message to take away from this is to strive to be data-informed, not data-driven.

Whilst data is great and I’m not trying to devalue it - because maybe data-driven would be an improvement from where you are today - be mindful that it’s not as objective as you may want it to be. It’s not perfect.

This is where I struggle a bit with the idea of AI taking over strategy and prioritization, etc.

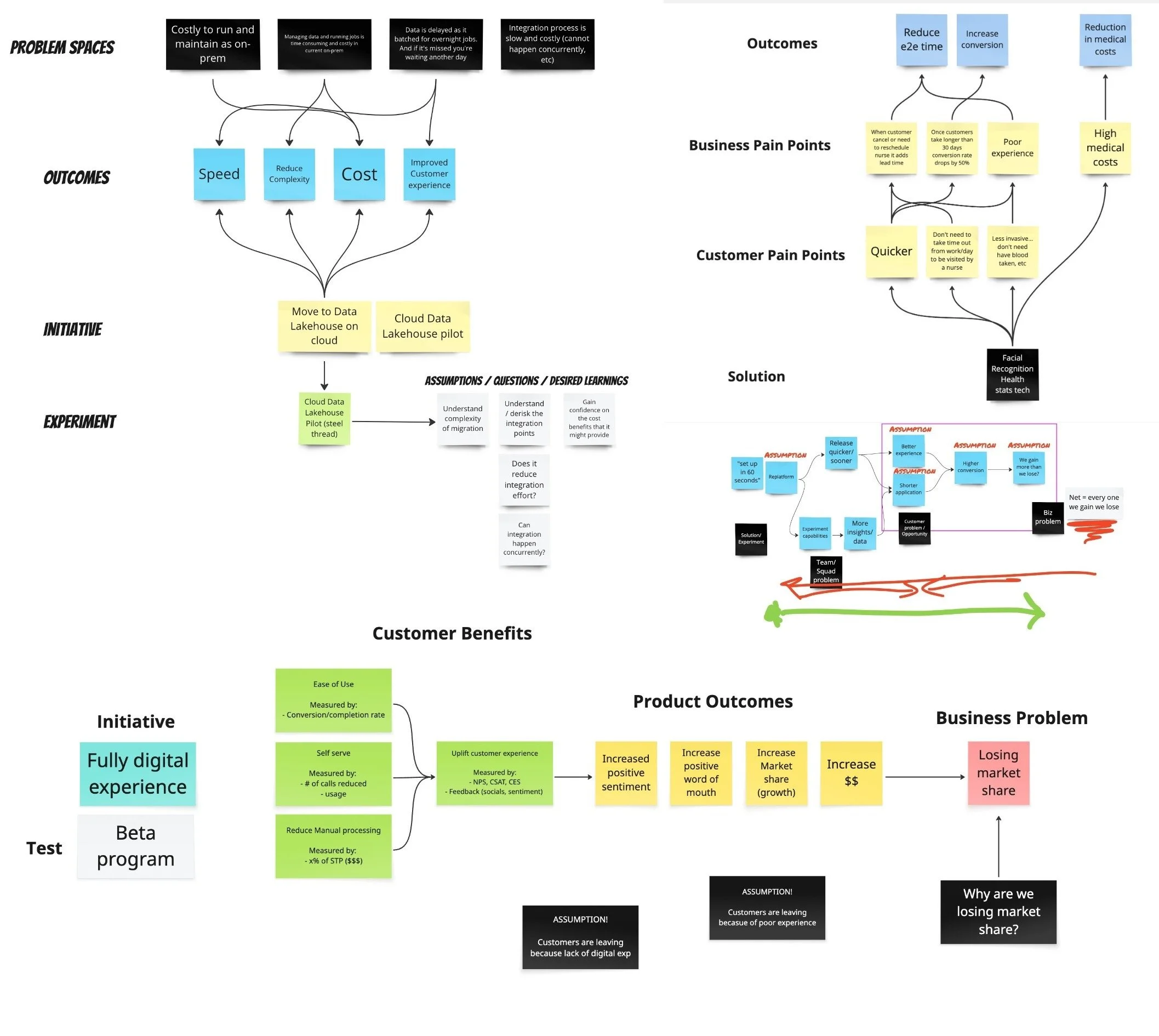

Sure, I think there are aspects where it can help, like this test I did in Miro the other day with a bunch of data from a Van Westendorp price sensitivity test I ran. Miro did a great job synthesizing the data (this would have taken me about 30 minutes to crunch).

Using Miro’s new AI to synthesize data from a price survey I did.

But the output is only as reliable as the quality of the data that goes in.

You also still need to overlay strategy and consider the subjective elements - it even calls that out by saying, “Further market testing and analysis may be required to refine this pricing strategy based on additional factors such as competition and market conditions.”

So it’s dangerous to take any output at face value and run with it.

For example, whilst I got a decent number of responses for this price sensitivity test and I also had screening questions to ensure that those responses were within my target audience, it’s still not an adequate sample size.

There was also a big swing in price based on geo-location. That's expected, as price sensitivity has a lot to do with socioeconomic factors like cost of living, salaries, etc., which can all vary a great deal in different parts of the world - just look at this data collected by Product Management Festival on product salaries (from $37k to $167k 😱)

Credit: PMF 2022. Average Product Management Salaries by country

Lastly, it’s a spectrum.

Some data you’re going to have more confidence in (a live A/B test vs early-stage interviews) and other data less, as it might be prone to more biases or simply come from a small sample.
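To put rough numbers on the sample-size part of that spectrum, here's a minimal sketch in Python. It's my own illustration, not something from the survey above: the function name `margin_of_error` and the sample sizes are made up, and it assumes a simple random sample and the textbook normal approximation for a proportion (which is itself shaky at very small samples).

```python
from math import sqrt
from statistics import NormalDist


def margin_of_error(proportion: float, sample_size: int, confidence: float = 0.95) -> float:
    """Approximate margin of error for an observed proportion.

    Assumes a simple random sample and the normal approximation,
    so treat the result as a rough guide, especially for small samples.
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    if not 0.0 <= proportion <= 1.0:
        raise ValueError("proportion must be between 0 and 1")
    # z-score for a two-sided interval at the requested confidence level
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return z * sqrt(proportion * (1 - proportion) / sample_size)


if __name__ == "__main__":
    # Hypothetical sample sizes: a handful of interviews vs. a large live test
    for n in (10, 100, 1000):
        moe = margin_of_error(0.5, n)
        print(f"n={n}: 50% observed, give or take {moe * 100:.1f} points")
```

If half of 10 respondents say they'd pay a given price, the plausible range is roughly 50% give or take 31 points. At 1,000 respondents it's give or take about 3 points. And none of this accounts for bias in who you asked in the first place, which no sample size will fix.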

Perhaps one day, AI will become smart enough to recognize these elements (strategy is another conversation) but until then, I’ll continue to take all this as an input to decision-making and not the decision itself.

Hope that’s helpful!

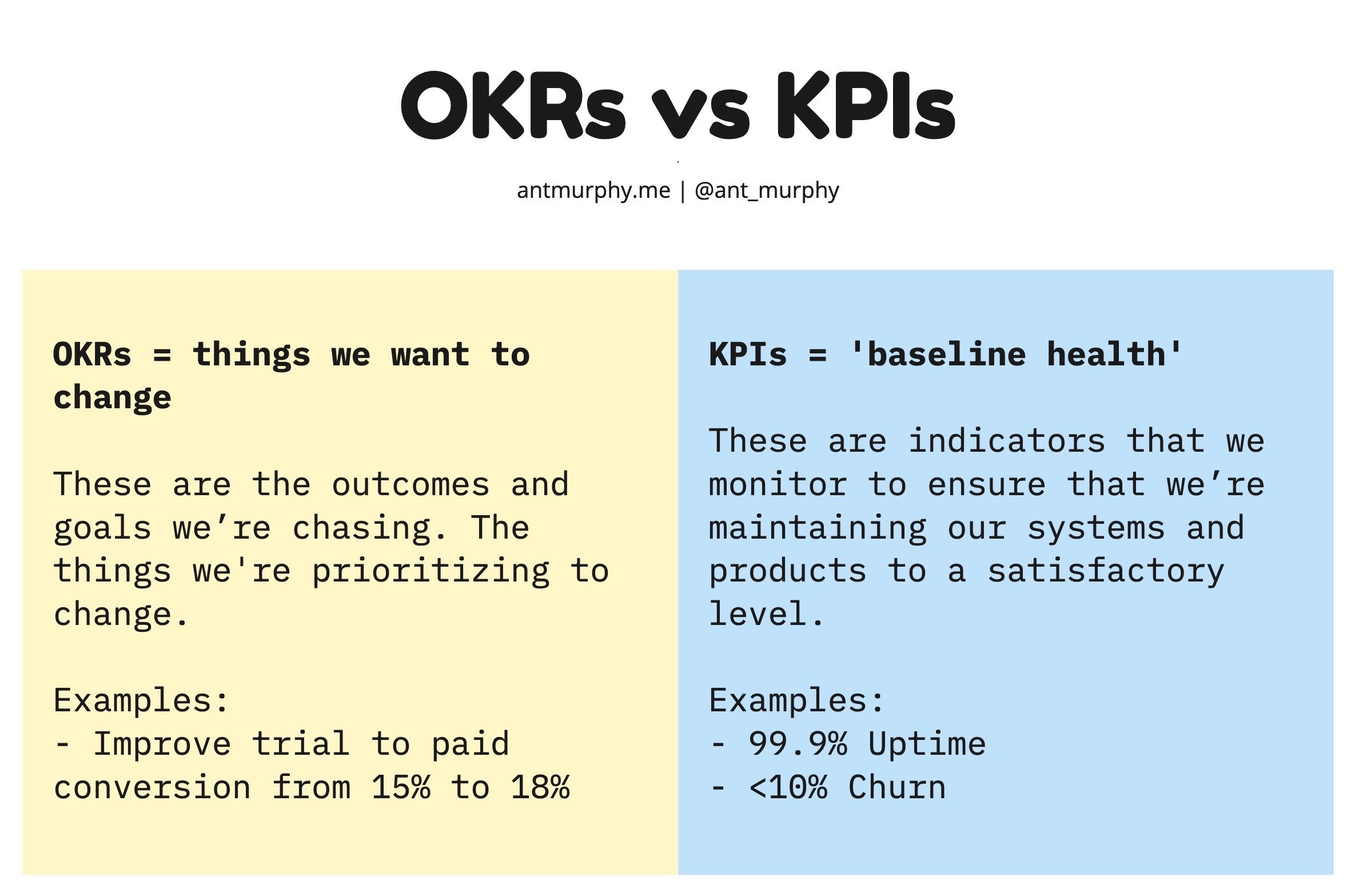

Your OKRs don’t live in a vacuum.

Yet this is exactly how I see many organizations treat their OKRs.

They jump on the bandwagon and create OKRs void of any context.

Here’s what I see all the time…